Khoury News

25 in ’25: Record number of Khoury research apprentices showcase work in Boston

From household robots and secure web browsing to melanoma detection and radar, Khoury College’s master’s apprentices truly ran the gamut this spring.

In Khoury College’s Research Apprenticeship Program, master’s students research a topic of their choice for one semester under the guidance of a faculty advisor. This spring, a record 25 apprentices presented their work in Boston, with topics ranging from antibody structure predictions to government censorship to AI’s persuasion abilities.


Abhijeet Chowdhury

Applying Tiling Theory to Extend Art Made from Gen AI

Throughout history, ornamental patterns have been a fundamental part of art and have appeared in countless cultures. Abhijeet Chowdhury, advised by Crane He Chen, used symmetry groups to try to create these patterns with generative AI. Chowdhury explained that the work aims to “mathematically recreate patterns and then use AI models to create different artistic patterns.”

Chowdhury used Adobe Illustrator and pattern generators to create the patterns, then a tiling matrix to render them. These methods produced unique patterns, which Chowdhury hopes will put a new spin on traditional ornamental patterns.
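Chowdhury's tiling matrix isn't described in detail, but the basic idea of building an ornament from symmetry operations can be sketched in a few lines. This minimal Python example assumes a motif stored as rows of characters (a hypothetical stand-in for an image). It mirrors the motif left-right and top-bottom to form a symmetric cell, then repeats that cell by translation, roughly what the wallpaper group pmm does with reflections and translations. The function names are illustrative and are not Chowdhury's code.

```python
from __future__ import annotations


def mirror_cell(motif: list[str]) -> list[str]:
    """Reflect a motif left-right and top-bottom to build one symmetric cell."""
    top = [row + row[::-1] for row in motif]  # horizontal mirror
    return top + top[::-1]                    # vertical mirror


def tile(cell: list[str], reps_x: int, reps_y: int) -> list[str]:
    """Repeat a cell by translation: reps_x copies across, reps_y copies down."""
    return [row * reps_x for _ in range(reps_y) for row in cell]


if __name__ == "__main__":
    motif = ["#.", "/."]  # hypothetical 2x2 motif
    for line in tile(mirror_cell(motif), reps_x=3, reps_y=2):
        print(line)
```

Swapping in other operations, such as rotations or glide reflections, would produce patterns from other wallpaper groups.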

Andrea Pinto

Antibody Structure Prediction: Enhancing Boltz with Chai-1

Antibodies play a vital role in protecting us from disease. Andrea Pinto’s research, guided by Wengong Jin, focuses on improving the accuracy of AI models in predicting antibody structures.

AlphaFold 3 is a state-of-the-art AI model that is known for its ability to predict protein structures with high precision. However, because AlphaFold 3 is not fully open-source, Pinto worked with alternative models like “Boltz” and “Chai-1” that are openly available and deliver comparable performance. She used Chai-1 to generate refined structures of antibodies from Boltz’s training set and is retraining Boltz using those predictions — creating a form of “AI self-distillation loop” where Chai-1 acts as the teacher and Boltz the student.

Pinto explained, “This matters, because if we can accurately predict antibody structures using AI, we can speed up the design of life-saving therapies. Antibodies are central to both vaccine and drug development.”

Ankit Sinha

Roles of State in Asymmetric Partially Observable Reinforcement Learning

In reinforcement learning, an agent learns through trial and error which actions earn the most reward. In partially observable settings, the agent sees only limited observations rather than the full state of its environment. Asymmetric methods take advantage of the fact that the full state is often available during offline training, for example in a simulator, so parts of the learning system can use it even though the deployed agent cannot. However, researchers don’t fully understand why this state-based asymmetry helps. Ankit Sinha’s research, advised by Chris Amato, aims to better understand how asymmetry functions during offline training.

“The main goal of the research is to identify this role of the states,” Sinha said.

Sinha used different environments to create fixed policies, then trained models using those policies. He then analyzed the results to determine which training methods were faster. The results and conclusions are still in progress, but once those conclusions are drawn, Sinha plans to further study bias and variance in reinforcement learning.

Bhavana Rajan Nair

Leveraging LLMs for Extracting Medical Insights from Online Discussions

Although they’re not the most reliable, online discussion posts have become a popular source for people seeking medical advice. Bhavana Rajan Nair, guided by Deahan Yu, is using large language models (LLMs) to analyze data found on online discussion boards to help users better understand information. Specifically, Nair’s research focuses on identifying adverse drug effects — unintended consequences possibly caused by drugs.

This was challenging, Nair noted, because “it is so nuanced. Unlike structural clinical records, online discussion boards are full of unstructured data.”

The study found that human annotations of these discussion posts were more comprehensive than LLM annotations. In the future, Nair hopes to “continue this research as my graduate thesis, where we would be looking to fine tune these models on the datasets we already prepared.”

Chirag Malhotra

OLLIV: Open Language-Image Input Variation

Vision language models (VLMs) are AI models that understand and connect images with text. These models are helpful for describing images to visually impaired users, and more generally for anyone searching for images. But despite their growing use, little research has examined how closely VLMs adhere to the instructions they are given, which is what Chirag Malhotra focuses on.

Advised by Saiph Savage, Malhotra explained that he’s “trying to understand how inference-following capabilities of large language models can be compared with each other and ordered.”

In his study, Malhotra prompted VLMs with different instructions, asking them to summarize and describe an image. Malhotra observed that the models described photos less accurately when they had to provide simple answers. In the future, Malhotra hopes to continue this research and fine-tune his methods to make the project more scalable.

Haibo Zhao

Hierarchical Equivariant Policy via Frame Transfer

Haibo Zhao has ambitions for household robots that extend well beyond the Roomba. He wants to train robots to do everyday household chores by having them observe humans and learn from them. To do this, Zhao uses a hierarchical policy built on the concepts of hierarchy and equivariance.

“If we want to pick up this phone, we first figure out a sub-goal: pick up the phone. And then we use a low-level agent to generate a trajectory for the robot to pick it up,” explained Zhao, who is advised by Robert Platt. “But once the phone rotates, we want the grasp poses to rotate, so we evaluated our model on many simulation experiments.”

Eventually, Zhao said, these methods can be used to train robots to do a variety of tasks, both around the home and outside it.

Harshith Umesh

Optimizing Refraction Proxy Deployment

In countries where governments censor what citizens can access online, many users turn to refraction networking (RN), a technique that hides traffic to blocked websites inside ordinary internet activity. These covert proxies are placed within transit networks known as autonomous systems (AS) that naturally carry global traffic, making them harder to detect or block.

Harshith Umesh, working under the guidance of Alden Jackson, wanted to identify the most strategic networks for deploying RN proxies on a global scale. To explore this, he constructed a censorship-aware internet topology model (AS + IXP multi-graph) that captures both public and previously underrepresented network relationships. Using this graph, Umesh analyzed Domain Name System resolution paths across the five most censored countries, drawing from nearly 500 million data points.

“We’re trying to compare how many refraction networking proxies we would need to place in unique autonomous systems to achieve 25%, 50%, and 75% coverage,” he explained.
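One standard way to approach a question like “how many proxies for 25%, 50%, or 75% coverage?” is greedy maximum coverage. The idea is to repeatedly place a proxy in whichever AS appears on the most paths that are not yet covered. Greedy selection is a well-known approximation, not a guaranteed optimum, and it is not necessarily Umesh's method. The Python sketch below makes three assumptions: each observed path is a list of AS numbers, a path counts as covered once any AS on it hosts a proxy, and the toy AS numbers in the usage note are made up.

```python
from __future__ import annotations

from collections import Counter


def greedy_placement(paths: list[list[int]], target: float) -> list[int]:
    """Pick ASes greedily until at least `target` (0..1) of the paths are covered.

    Assumption: a path is covered if any AS on it hosts a refraction proxy.
    """
    uncovered = [list(dict.fromkeys(p)) for p in paths]  # dedupe, keep order
    needed = target * len(paths)
    covered, chosen = 0, []
    while covered < needed and uncovered:
        # Count how many still-uncovered paths pass through each AS.
        counts = Counter(asn for path in uncovered for asn in path)
        if not counts:
            break  # only empty paths remain; nothing more can be covered
        best, gain = counts.most_common(1)[0]
        chosen.append(best)
        covered += gain
        uncovered = [p for p in uncovered if best not in p]
    return chosen
```

For example, with the hypothetical paths `[[1, 10, 20], [2, 10, 21], [3, 10, 22], [4, 11, 20], [5, 12, 23]]`, a 50% target is met by a single proxy in AS 10, which sits on three of the five paths.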

This research presents a scalable, data-driven framework for evaluating and improving censorship circumvention, helping activists and developers design more resilient and targeted systems for safeguarding internet freedom.

Jiawen Cai

An Interactive Platform Evaluating LLMs' Persuasive Abilities

As AI infiltrates more and more aspects of our everyday lives, Jiawen Cai, advised by Weiyan Shi, is trying to answer a few questions: How effectively can AI models persuade a human? What strategies lead to successful AI persuasion? Can AI dynamically adopt persuasive techniques? Do personal backgrounds influence how persuasion works?

To evaluate AI models, Cai gave them a claim and had them build a persuasive argument for it. Human users then voted on which argument persuaded them more.

This research matters, Cai said, because “the advancements of LLMs have changed people's decision-making abilities.” The goal, she added, is to create a benchmark for evaluating AI persuasion and to explore the ethical questions that surround AI communication.

Junchao Zhu

A Testing Framework for Distributed Systems

Distributed systems, groups of computers in different locations that work together, are all around us. Google Search, Netflix, and most banks use these systems to process and store information. Junchao Zhu, advised by Cristina Nita-Rotaru, explored how these systems get hacked and how they can be made to keep behaving correctly while under attack.

“Testing distributed systems is very difficult compared to applications that run on one single device,” Zhu noted. “A distributed system consists of multiple nodes that are running asynchronously, and every node may be in different states.”

To tackle this complexity, Zhu developed testing methods to find and pinpoint bugs. This requires “handling and manipulating every single message from the system that is flowing around between the nodes and tracking the behaviors of the systems,” he said.
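Zhu's framework isn't described in detail, but the core idea of taking control of every message can be illustrated with a toy harness. In the Python sketch below, the replica logic and all function names are hypothetical. The harness delivers the same set of messages to two replicas in every possible order and reports the first pair of orders that leaves them in different states. It catches a buggy replica that keeps whichever update arrived last, but not one that keeps the update with the highest sequence number.

```python
from __future__ import annotations

from itertools import permutations
from typing import Callable, Optional

# Hypothetical message and state shapes for this toy example.
Message = tuple[int, str]  # (sequence number, value)
State = tuple[int, str]    # (last applied sequence number, value)
ApplyFn = Callable[[State, Message], State]


def apply_last_arrival(state: State, msg: Message) -> State:
    """Buggy replica: overwrite state with whatever message arrived last."""
    return msg


def apply_highest_seq(state: State, msg: Message) -> State:
    """Fixed replica: only accept updates newer than what it already has."""
    return msg if msg[0] > state[0] else state


def run_replica(apply_fn: ApplyFn, order: tuple[Message, ...]) -> State:
    """Deliver messages to one replica in exactly the given order."""
    state: State = (0, "")
    for msg in order:
        state = apply_fn(state, msg)
    return state


def find_divergence(apply_fn: ApplyFn, messages: list[Message]) -> Optional[tuple]:
    """Try every delivery order on two replicas; return a pair that diverges."""
    for order_a in permutations(messages):
        for order_b in permutations(messages):
            if run_replica(apply_fn, order_a) != run_replica(apply_fn, order_b):
                return order_a, order_b
    return None
```

Exhaustive enumeration grows factorially with the number of messages, so practical testing tools typically sample or prune delivery orders rather than trying them all.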

Zhu is still developing the testing programs and hopes to continue this research in the coming months.

Katie Elyse Song

Better Passwords Through Oblivious Pseudorandom Function

Most websites and interfaces require passwords, but many of these websites are susceptible to brute-force attacks and data breaches.

This is an issue, said Katie Elyse Song, because traditional methods like hashing are not adequately protecting user passwords. Hashing stores a scrambled, one-way version of each password instead of the password itself, so hackers who breach a database can’t directly read the actual passwords.

“Even hashed passwords,” Song explained, “pose a risk of leak, showing the need for more secure private solutions like OPRFs.”
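For readers unfamiliar with hashing, the standard-library sketch below shows a common server-side approach, not Song's code. Each password is stored as a random salt plus the output of a deliberately slow key-derivation function (PBKDF2 here). The function names and the iteration count are illustrative assumptions. The sketch also shows why a leak still matters: anyone who steals a salt and digest can run the same check against guessed passwords offline, which is the kind of risk Song points to.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # illustrative work factor; tune for your hardware


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) to store instead of the plaintext password."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest for a login attempt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

An attacker holding a leaked `(salt, digest)` pair can call `verify_password` with guesses from a wordlist. The slow hash only raises the cost of each guess; it doesn't stop guessing.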

OPRF stands for oblivious pseudorandom function, a cryptographic protocol in which a server uses its secret key to help compute a value from a user’s password without ever seeing the password itself. Song, advised by Ariel Hamlin, used an OPRF to separate the web server from the key server, protecting the passwords. Moving forward, Song wants to make this method more scalable.
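To make the “oblivious” part concrete, here is a toy version of a classic blinded-exponentiation OPRF (the 2HashDH construction), written in Python. It is not Song's implementation. It uses a deliberately tiny modular group, so it is insecure and meant only to show the arithmetic. Standardized OPRFs use elliptic-curve groups. All names and parameters are illustrative assumptions. In a split like the one Song describes, one plausible arrangement is for the key server to hold `key` and run only `server_evaluate`.

```python
from __future__ import annotations

import hashlib
import secrets
from typing import Optional

# Toy safe-prime group: P = 2*Q + 1 with Q prime. Far too small to be secure;
# chosen only so the arithmetic is easy to follow.
P = 2039
Q = 1019


def hash_to_group(password: str) -> int:
    """Hash the password, then square mod P to land in the order-Q subgroup."""
    h = int.from_bytes(hashlib.sha256(password.encode()).digest(), "big")
    return pow(h % P, 2, P)


def client_blind(password: str, r: int) -> int:
    """Client: hide the password by raising its group element to a secret r."""
    return pow(hash_to_group(password), r, P)


def server_evaluate(blinded: int, key: int) -> int:
    """Server: apply its secret key; it only ever sees the blinded value."""
    return pow(blinded, key, P)


def client_finalize(password: str, evaluated: int, r: int) -> bytes:
    """Client: undo r (via its inverse mod Q) and hash the result into the output."""
    unblinded = pow(evaluated, pow(r, -1, Q), P)  # equals hash_to_group(pw)^key mod P
    return hashlib.sha256(password.encode() + unblinded.to_bytes(2, "big")).digest()


def oprf(password: str, key: int, r: Optional[int] = None) -> bytes:
    """Run one full round trip; r is random unless supplied (e.g., for testing)."""
    if r is None:
        r = secrets.randbelow(Q - 1) + 1  # uniform in [1, Q-1], invertible mod Q
    blinded = client_blind(password, r)
    return client_finalize(password, server_evaluate(blinded, key), r)
```

Because the blinding factor `r` cancels out, the client gets the same output on every run. Meanwhile, each request the server sees is a freshly blinded value, and the server never learns the password.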

Keivalya Bhartendu Pandya

Benchmarking Robot Learning Algorithms for Real-World Challenges

If robots are increasingly available, Keivalya Bhartendu Pandya found himself asking, why aren’t more people using them in daily life? To help address this, Pandya, under the guidance of Zhi Tan, analyzed and ranked different robot training methods, with the hope of making robots more user-friendly for everyone. This research is challenging, Pandya noted, because the lab and the real world are quite different.

“The lab is very controlled, predictable, and static, whereas the real world is noisy, dynamic, and very unpredictable,” explained Pandya.

So far, Pandya has examined different learning approaches, such as perception, imitation learning, and vision-language-action models. He hopes that with continued research, robots will become more common and easier to train.

Kiersten Grieco

User Data Collection and Profiling in Smart TVs and Fridges

When smart devices are designed to be as seamless as possible, it’s easy for users to overlook the privacy implications they present. So, Kiersten Grieco, under the guidance of David Choffnes, explored how these devices collect data and how that data is shared.

Grieco focused on top brands like Roku, Google Chromecast, Apple TV, and more, and tried to determine how these technologies share data with third-party websites. To do this, Grieco explained, she “identifies who the device contacts, then submits data requests to companies using methods outlined in their privacy policies to see what information they're collecting.”

Most companies have yet to respond, and the ones that did claimed they were not collecting data. However, Grieco observed the devices contacting third-party organizations, revealing evidence of data-sharing, and thus gaps in the information reported by the companies.

In the future, Grieco said, she hopes to “investigate contents of data packets being sent via man-in-the-middle techniques, examine user data obtained from the third parties, and further investigate how it's being distributed across the internet by third parties.”

Lahari Boni

Building Technologies to Enhance AI Literacy Among Blind and Low Vision Students

The recent influx of AI education tools has primarily benefitted sighted learners, Lahari Boni said, at the expense of blind and low-vision students.

“Imagine trying to learn and understand how ChatGPT, for instance, works without being able to see the screen,” Boni said.

Boni, advised by Maitraye Das, focuses on designing AI literacy toolkits for blind and low-vision students, with audio-based, interactive, gamified, and personal-feedback-based features.

“Everything is voice based, so the AI and then the LLM explain to students how different models work, using verbal and visual analogies,” Boni noted.

In the future, she hopes to expand the toolkit and conduct user studies to see how these techniques play out in the real world.

Ling Liu

Spacetime Volumes for StarCraft Gameplay Visualization

Traditional 2D video game maps show where actions happen, but not when. Ling Liu, guided by Seth Cooper, aims to generate 3D maps that add this missing temporal information. This requires parsing replay files for data, cleaning and filtering the data, and unifying frames from the data to construct the maps. By introducing time as a third axis, Liu’s maps can also reveal shifts in power over the course of a game.
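Liu's replay-parsing pipeline isn't shown, but the core move of treating time as a third axis can be sketched simply. This Python example assumes that events have already been extracted from replays as hypothetical `(x, y, frame)` tuples. It bins them into a sparse 3D grid of counts that a renderer could draw as a spacetime volume. The cell size and frames-per-slice values are arbitrary assumptions.

```python
from collections import Counter
from typing import Iterable, Tuple


def spacetime_volume(
    events: Iterable[Tuple[int, int, int]],
    cell_size: int = 32,
    frames_per_slice: int = 240,
) -> Counter:
    """Bin (x, y, frame) events into a sparse 3D grid keyed by (i, j, t)."""
    volume: Counter = Counter()
    for x, y, frame in events:
        # Spatial cells on the first two axes, time slices on the third.
        voxel = (x // cell_size, y // cell_size, frame // frames_per_slice)
        volume[voxel] += 1
    return volume
```

Stacking the time slices along the third axis turns a flat heat map into a volume, so a burst of activity in one region can be seen starting and ending at particular moments in the game.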

In the future, Liu hopes to “refine [the study’s] results, review game features or player strategies, and help game researchers do a deeper game analysis or game AI training.”

Nayonika Sen

A Research Tool for LLM Internals

While large language models have become very popular, many users do not understand how they actually work. Nayonika Sen’s research, advised by David Bau and Arjun Guha, aims to refine a tool she developed that will give researchers more insights into how the models operate.

The National Deep Inference Fabric (NDIF), a $9 million research project housed at Northeastern University and led by Bau, has served as the foundation of the project, Sen said.

“NDIF is a research computing project that enables us to crack open any of these mysteries of these large-scale AI systems,” she said. “We can build models that enable us to further investigate the black box of AI.”

Moving forward, Sen hopes to refine her tool to accommodate larger AI models and more tasks. This is important, Sen noted, because “it unlocks new opportunities for AI research and development. This work is just the beginning.”

Neel Sortur

Shape Inference from Radar Signals Using Differentiable Inverse Methods

Determining 3D shapes from radar images is difficult but necessary in applications such as autonomous driving. Neel Sortur’s research, advised by Robin Walters, aims to create a new model to understand the distribution of shapes and improve the vision capabilities of radar-enabled devices. Noise, meaning unwanted interference from the environment, can make this work difficult.

“Oftentimes in applications you have noise from the environment coming into your sensors, and in an application like autonomous driving, you can imagine you only see the front of a person that's crossing a crosswalk,” he explained.

By using physical optics simulations to model electromagnetic scattering and generate shapes, Sortur is trying to empower models to better predict and understand the world around them. This, he hopes, will improve device safety and capability in industries like aerospace and autonomous vehicles.

Nihira Golasangi

Deep Clustering for Mass Spectrometry Imaging

Mass spectrometry imaging (MSI) is a technique that maps where molecules such as proteins are located within a sample, helping scientists discover and study them. Nihira Golasangi, advised by Kylie Ariel Bemis, focuses on building deep clustering models that help process MSI data.

“This package makes it easier [compared to traditional methods] for the user to cluster data, and because we ended up disintegrating the training of autoencoder and convolutional neural networks, the user has more control over the process,” she said.

Golasangi’s research has the potential not only to benefit medical researchers attempting to discover biomolecules and map relationships between them, but also to produce “adaptable and individual solutions for different real-world applications,” she explained.

Niraj Chandrakant Chaudhari

GenZymes: De Novo Enzyme Generation with RFDiffusion

Proteins are chains of amino acids encoded by DNA, and understanding their structure is key for bioengineering and drug discovery. By applying diffusion-based AI methods, Niraj Chandrakant Chaudhari is working to design new proteins from scratch.

“With this technique, we can generate proteins with specific shapes or functions in mind,” said Chaudhari, who is advised by Predrag Radivojac. “For example, we can generate a symmetric protein or a protein with specific binding target or a specific unit.”

To do this, researchers must create a protein structure, predict amino acid sequences based on that structure, and compare each AI model’s accuracy against other models.

This work “is not complete yet,” Chaudhari said. “We want to apply this technique on a special class of protein: enzymes.”

Pavan Kumar

User-Led Data Minimization with LLMs to Navigate Privacy Trade-offs in Chatbots

Although many users interact with AI models in personal ways and disclose tons of information, they often do not understand how AI deals with private data. Pavan Kumar, advised by Tianshi Li, wants to know how users can protect that data.

“Last month, I was filing my taxes, and I shared all my financial details to ChatGPT, and there's nothing wrong with that. Most of us do it because there's a sort of trust that forms with chatbots,” Kumar noted. “The problem is that users have zero visibility and control over how the data is handled. The core issue is how we can make the privacy experience more user led.”

To do this, Kumar generated synthetic prompts to test AI models and developed tools that detect when private data is being shared and stored. When these tools detect private data, they alert users and let them decide how to proceed. Kumar said he hopes to make these tools easier to use and more accessible.

“We're going to make this smaller and lighter so it can run on edge devices as well,” he said. “Right now, it works on laptop, but it takes a lot of performance and RAM.”
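Kumar's detectors aren't described in detail and may well rely on language models. Still, a much simpler rule-based version can show the “detect, then let the user decide” flow. This Python sketch uses a few hypothetical regular expressions for emails, U.S.-style phone numbers, and Social Security-style numbers. It flags matching spans in a prompt and builds a redacted copy that a user could choose to send instead. The patterns and function names are illustrative assumptions, not Kumar's tools.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately simple patterns; real detectors need far more coverage.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_private_data(prompt: str) -> list[tuple[str, str]]:
    """Return (category, matched text) pairs so the user can review them."""
    return [
        (label, m.group())
        for label, rx in PATTERNS.items()
        for m in rx.finditer(prompt)
    ]


def redact(prompt: str) -> str:
    """Replace each detected span with a placeholder such as [EMAIL]."""
    for label, rx in PATTERNS.items():
        prompt = rx.sub(f"[{label.upper()}]", prompt)
    return prompt
```

A chatbot front end could show the findings to the user and offer to send either the original or the redacted prompt. Because it is just a handful of regular expressions, a check like this is cheap enough to run on small devices.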

Pranav Boditalupula Sivasankara Reddy

Understanding Human Behavior in Social Engineering Attacks

Social engineering attacks, where users receive emails or texts meant to make them share sensitive information, are increasingly common. Pranav Boditalupula Sivasankara Reddy, guided by Sarita Singh, explores the human element that makes some users more susceptible to certain attacks.

“Attackers usually craft their messages to manipulate human psychology, invoking certain emotions like fear, trust and urgency to trick users into clicking on harmful links and giving away sensitive data,” Reddy noted.

Reddy explored which emotions and psychological triggers, such as guilt or generosity, appear to make victims more susceptible to such attacks. In the future, Reddy hopes to continue exploring the topic by using human subjects. After that, he wants to launch a machine learning model that predicts how susceptible someone is to these attacks, and that provides feedback and simulations to teach people how to resist.

Pruthvi Prakash Navada

An Exploration of Dynamic Reconfiguration Protocols in etcd

Distributed consensus protocols let multiple machines, or nodes, agree on a single value or decision, even if some of them fail or messages between them are delayed.

“These systems are essential for maintaining the consistency of data,” said Pruthvi Prakash Navada, who is working to make reconfiguring these systems easier and more scalable.

Navada, advised by Ji-Yong Shin, said the study relies on Raft, a common distributed consensus protocol.

“Why is reconfiguration important?” he asked. “Because we want our systems to be highly available, scalable, and cost-effective.”

Navada aims to simplify the reconfiguration process to make the protocols more efficient and to eliminate single points of failure that can crash systems. He also wants to explore automatic reconfiguration and possibly develop a performance testing framework to compare approaches.
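Navada's specific approach isn't described, but the original Raft design shows why reconfiguration is delicate. If a cluster switched memberships all at once, an old majority and a new majority could briefly act independently and pick two different leaders. Raft's joint-consensus rule prevents this. While the cluster moves from the old configuration to the new one, an entry counts as committed only when a majority of each configuration has acknowledged it. This minimal Python check illustrates that rule. The node names in the usage note are made up.

```python
from __future__ import annotations


def has_majority(acks: set[str], members: set[str]) -> bool:
    """True if strictly more than half of `members` have acknowledged."""
    return len(acks & members) > len(members) // 2


def joint_committed(acks: set[str], old: set[str], new: set[str]) -> bool:
    """Joint consensus: require separate majorities in BOTH configurations."""
    return has_majority(acks, old) and has_majority(acks, new)
```

With `old = {"a", "b", "c"}` and `new = {"c", "d", "e"}`, acknowledgments from `a` and `b` satisfy the old configuration but not the new one, so the entry is not yet committed. Acknowledgments from `a`, `c`, and `d` satisfy both.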

Ronhit Neema

Transparent Checkpointing: A New Model for Hybrid HPC Computations

Checkpointing — a program periodically saving its state so it can be resumed from that state after crashing — is a fundamental concept in computer science. Ronhit Neema compares it to a video game: “You make some progress, then you stop for a while and do your own work, then you come back to your console and start where you left. You don't have to start from scratch.”

Neema, advised by Gene Cooperman, attempted to combine the checkpointing tool MANA with DMTCP (distributed multithreaded checkpointing) to “expand the portability and stability so MANA can run on multiple platforms” — making it easier to use. This is important, he said, so users can checkpoint and restart even with long and complicated codes, and without having to recompile things.
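MANA and DMTCP are transparent: they capture a running program's state from the outside, so the application code doesn't have to change. For contrast, the Python sketch below shows the kind of hand-written, application-level checkpointing that transparent tools spare programmers from writing. The loop, file name, and simulated crash are all hypothetical. The program saves its progress every few steps with an atomic file replace, and on restart it resumes from the last save instead of starting from scratch.

```python
import os
import pickle
import tempfile
from typing import Optional


def long_computation(
    total_steps: int, path: str, every: int = 100, crash_at: Optional[int] = None
) -> int:
    """Sum 0..total_steps-1, checkpointing (step, total) to `path` every `every` steps."""
    if os.path.exists(path):
        with open(path, "rb") as f:
            step, total = pickle.load(f)  # resume from the last checkpoint
    else:
        step, total = 0, 0
    while step < total_steps:
        if step == crash_at:
            raise RuntimeError(f"simulated crash at step {step}")
        total += step
        step += 1
        if step % every == 0:
            tmp = path + ".tmp"
            with open(tmp, "wb") as f:
                pickle.dump((step, total), f)
            os.replace(tmp, path)  # atomic swap: never leaves a half-written checkpoint
    return total


def demo() -> int:
    """Crash partway through, then rerun and finish from the saved state."""
    path = os.path.join(tempfile.mkdtemp(), "checkpoint.pkl")  # hypothetical file
    try:
        long_computation(1000, path, crash_at=550)
    except RuntimeError:
        pass  # the first run dies; the checkpoint from step 500 survives
    return long_computation(1000, path)
```

Here the programmer must decide what state to save and when. Transparent checkpointing takes over that job for arbitrary programs, which is what makes it attractive for large HPC codes.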

Stefanie Colino

A Landscape Study of Undergraduate AI Programs

Access to AI programs and resources varies greatly across educational institutions. Stefanie Colino, advised by Carla Brodley and Felix Muzny under the umbrella of the Center for Inclusive Computing, researched how different AI undergraduate programs are structured and who can access them.

This area, Colino noted, has not been studied much before, but is important to understand because “we want to ensure that everyone has access to the quality education needed to go out and be successful.”

To research undergraduate institutions and their AI programs, Colino used a program that “automatically searches the internet for program requirements, downloads the requirements as a PDF, then puts that into a data extractor.” She noted that this ongoing work is crucial for understanding not just the structure of AI programs, but also the outcomes of the students who enroll in them.

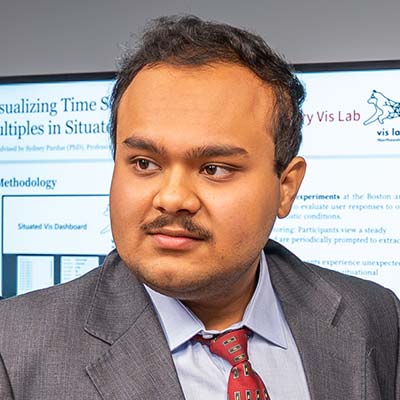

Tejas Mohan Karkera

Leveraging Hybrid Variants of CNN and ViT for Melanoma Detection

Melanoma is a highly aggressive form of skin cancer that can be hard to detect, but advancements in deep learning might be able to help. Tejas Mohan Karkera, advised by Divya Chaudhary, is attempting to combine convolutional neural networks (CNNs) with vision transformers (ViTs) to detect melanoma earlier.

To test these models, Karkera applied different data processing techniques, then ranked the resulting models by effectiveness. He also presented the models visually, noting that their behavior is easiest to understand that way.

“The model is looking to predict if it's a benign or a malignant image and you can see that the best performing model is the one that focuses on the cancer cells more accurately than anything else,” he said.

Karkera hopes this research will help doctors diagnose patients more quickly, improving their chances of a successful recovery.

Vedant Rishi Das

SmeLLM: Automatic Code Smell Identification and Refactoring Using LLMs

Code smells, despite their name, are not actual smells. They’re signs that something might be wrong with a program’s structure or design, even if the code runs properly. At Northeastern, TAs and professors who grade coding assignments use their own discretion to judge the quality of students’ code, which sometimes leads to grading inconsistencies.

“I wished there was a more standardized evaluation process so that the detected directions are more accurate and more efficient,” Vedant Rishi Das said.

To address this issue, Das, under the guidance of Joydeep Mitra, used a tool based on a large language model to detect code smells and generate reports on them, making the whole process more efficient and standardized. After finding some success with the tool, Das has high hopes for its implementation.

“We believe that we can use this on actual course submissions from students and grades from teaching assistants to conduct real-world research,” he said.
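Das's tool relies on a large language model, which a short example can't reproduce. For comparison, traditional detectors flag code smells with fixed rules. The sketch below is one such rule-based detector, not Das's method. It uses Python's standard `ast` module to flag two classic smells: long methods and long parameter lists. The function name and both thresholds are arbitrary assumptions.

```python
import ast
from typing import List, Tuple


def find_smells(
    source: str, max_lines: int = 20, max_params: int = 4
) -> List[Tuple[str, str, int]]:
    """Return (function name, smell, measurement) for each flagged function."""
    smells = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        length = node.end_lineno - node.lineno + 1  # counts the def line too
        if length > max_lines:
            smells.append((node.name, "long method", length))
        args = node.args
        n_params = len(args.posonlyargs) + len(args.args) + len(args.kwonlyargs)
        if n_params > max_params:
            smells.append((node.name, "long parameter list", n_params))
    return smells
```

Rules like these are consistent but rigid. They miss smells that depend on meaning, such as a misleading name or duplicated logic, which is part of the appeal of an LLM-based approach.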
